Articles

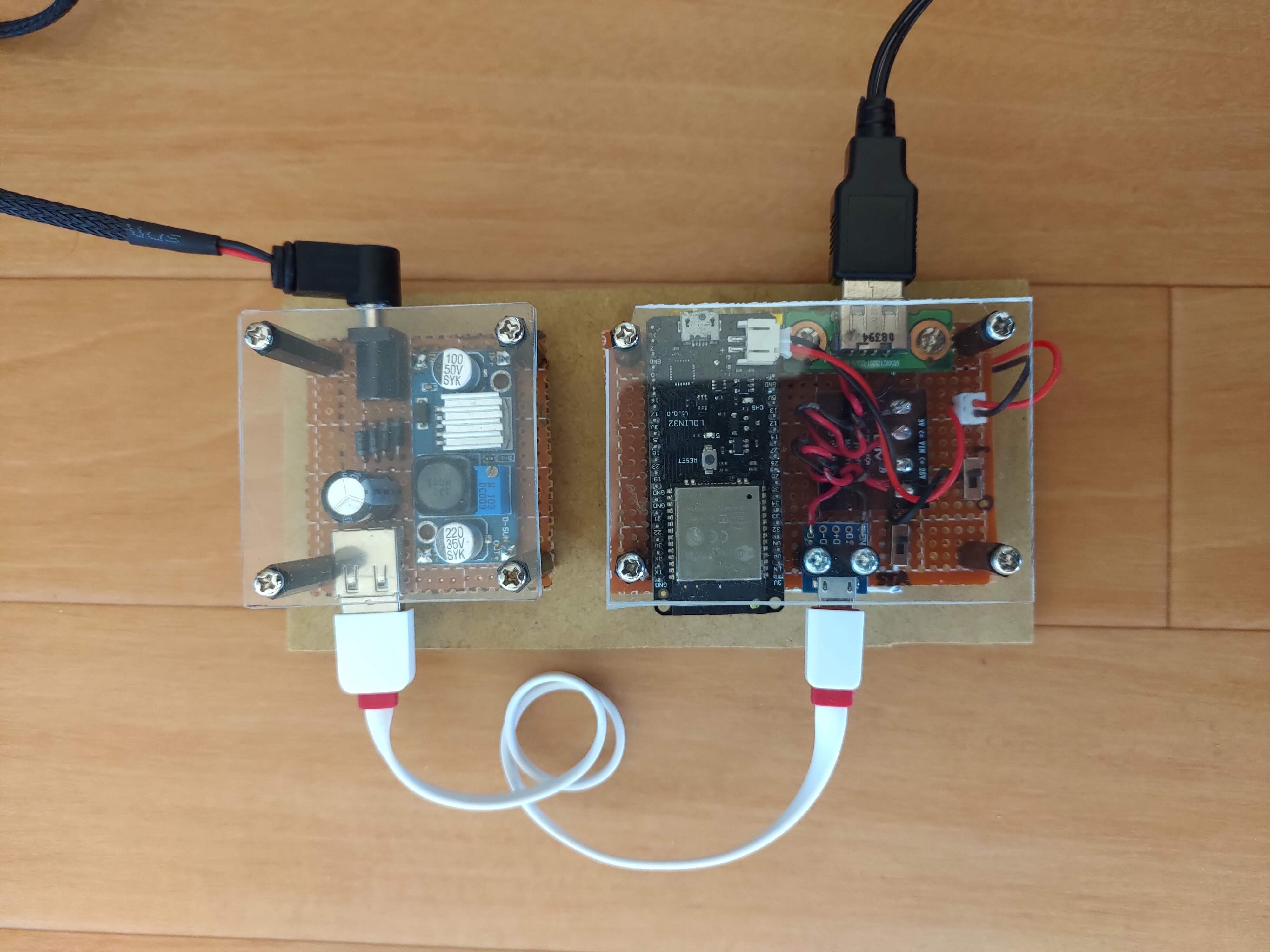

Energy transformation demonstration

Earlier this year, a friend of mine was organizing an event to promote eco-friendly behaviors. She wanted someone to talk about our relationship with energy and asked me to give a presentation on the topic, which I agreed to. I wanted the presentation to be entertaining, so I designed a set of hardware demonstrations aimed at explaining physical concepts related to energy.

RFID wristband

RFID tags are great but often easy to lose. To solve this problem, I designed this case for an RFID tag, which can be worn on one's wrist using a standard watch strap. The case was designed using Fusion360 and then 3D printed.

Deploy a Neo4J instance in Kubernetes

Using this manifest, a Neo4J instance can be deployed in a Kubernetes cluster.

Loading a Neo4J 3.X database in a Neo4J 4.X instance

Importing data from a Neo4J v3 database into a v4 one can be a hassle. Here are the steps to achieve the migration.

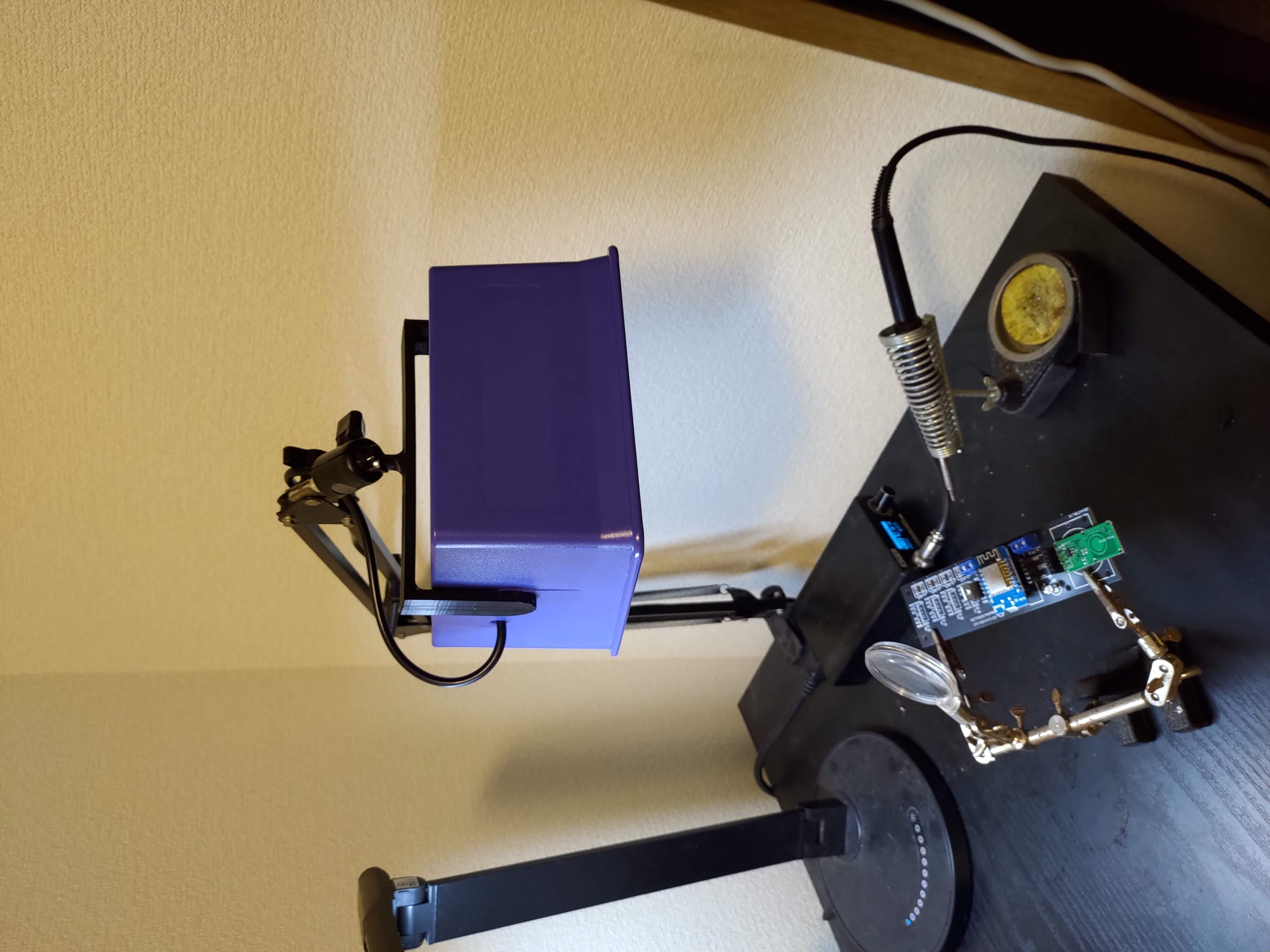

3D printed bracket for fume extractor

I got myself a fume extractor, but it takes up a rather large amount of real estate on my desk. To be able to move it out of the way easily, I decided to mount it on an arm, so I designed and 3D printed an attachment bracket for it.

RESTful API design

There are four main kinds of operations when working with a database: Create, Read, Update and Delete (CRUD). However, databases are usually not exposed directly to clients. Instead, those operations are performed by a server-side application with a client-facing API. Nowadays, most such APIs are based on HTTP. An HTTP API can be built with complete freedom, but guidelines for best-practice HTTP API design have been established. An API following those guidelines is called a REST (or RESTful) API.
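As a rough illustration, CRUD operations are conventionally mapped onto HTTP methods along the lines of the sketch below. The `articles` resource, its routes, and the `rest_request` helper are hypothetical names chosen for this example, not taken from the article.

```python
# Conventional CRUD-to-HTTP mapping for a hypothetical "articles" resource.
# The resource name and routes are assumptions made for illustration only.
CRUD_TO_HTTP = {
    "create": ("POST", "/articles"),
    "read": ("GET", "/articles/{id}"),
    "update": ("PUT", "/articles/{id}"),
    "delete": ("DELETE", "/articles/{id}"),
}


def rest_request(operation, resource_id=None):
    """Return the request line a REST client would send for a CRUD operation."""
    method, template = CRUD_TO_HTTP[operation]
    if "{id}" in template and resource_id is None:
        raise ValueError(f"'{operation}' needs a resource id")
    # Templates without an {id} placeholder are returned unchanged.
    return f"{method} {template.format(id=resource_id)}"


print(rest_request("create"))    # POST /articles
print(rest_request("read", 42))  # GET /articles/42
```

The point of the convention is that the HTTP method carries the operation and the URL identifies the resource, so clients do not need operation names such as `/getArticle` in the path.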

Self-hosted GitLab instance for DevOps on Ubuntu 18.04

GitLab provides a great number of tools needed for the DevOps cycle of an application. In this guide, we'll install a GitLab instance on our own server and configure it to fit our DevOps needs. Here, we will use a fresh install of Ubuntu 18.04 as a base.

Deploying a TensorFlow model on a Jetson Nano using TensorFlow serving and K3s

The Nvidia Jetson Nano constitutes a low-cost platform for AI applications, ideal for edge computing. However, due to the architecture of its CPU, deploying applications to the SBC can be challenging. In this guide, we'll install and configure K3s, a lightweight Kubernetes distribution made specifically for edge devices. Once done, we'll build and deploy a TensorFlow model in the K3s cluster.

Containerization of a Flask application

Flask can be seen as the equivalent of Express for Python. However, although an Express application is basically production-ready, a Flask app outputs the following when executed by itself:

Deployment of a TensorFlow model to Kubernetes

Let’s imagine that you’ve just finished training your new TensorFlow model and want to start using it in your application(s). One obvious way to do so is to simply import it in the source code of every application that uses it. However, it might be more versatile to keep your model in one place as a standalone service and simply have applications exchange data with it through API calls. This article will go through the steps of building such a system and deploying the result to Kubernetes.
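To give an idea of what such an API call can look like, here is a minimal sketch that assumes the model is served by TensorFlow Serving through its REST predict endpoint on port 8501 (the port exposed by the official Docker image). The host, the model name `my_model`, and the `build_predict_request` helper are hypothetical; the article itself may use a different setup.

```python
import json


def build_predict_request(host, model_name, instances, port=8501):
    """Build the URL and JSON body for a TensorFlow Serving REST predict call.

    Assumes TensorFlow Serving's row-format request: {"instances": [...]}.
    """
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances})
    return url, body


# Hypothetical host, model name, and input values, for illustration only.
url, body = build_predict_request("localhost", "my_model", [[1.0, 2.0, 3.0]])
print(url)
print(body)
```

An application would send `body` to `url` in an HTTP POST request with any HTTP client and read the predictions from the JSON response, without ever loading the model itself.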